Supercharge Your Local Development Using AI (For Free)

As a developer, you're constantly looking for ways to streamline your workflow, reduce errors, and increase productivity. One way to achieve this is by leveraging the power of Artificial Intelligence (AI) in your local development environment. In this blog post, we'll explore how AI can supercharge your local development and take your coding skills to the next level.

GitHub Copilot Free Alternative

Almost every developer has heard of GitHub Copilot, the most popular coding assistant, backed by OpenAI Codex, and of ChatGPT, the most popular LLM (Large Language Model) product. People all around the world use ChatGPT to speed up their work, and software engineers use coding assistants to help with their day-to-day tasks. But as you might know, a good coding assistant is not FREE.

Copilot costs $10/month. Here I will show you how to get a FREE coding assistant, as well as a free personal ChatGPT that fits your own use case and is fully customizable.

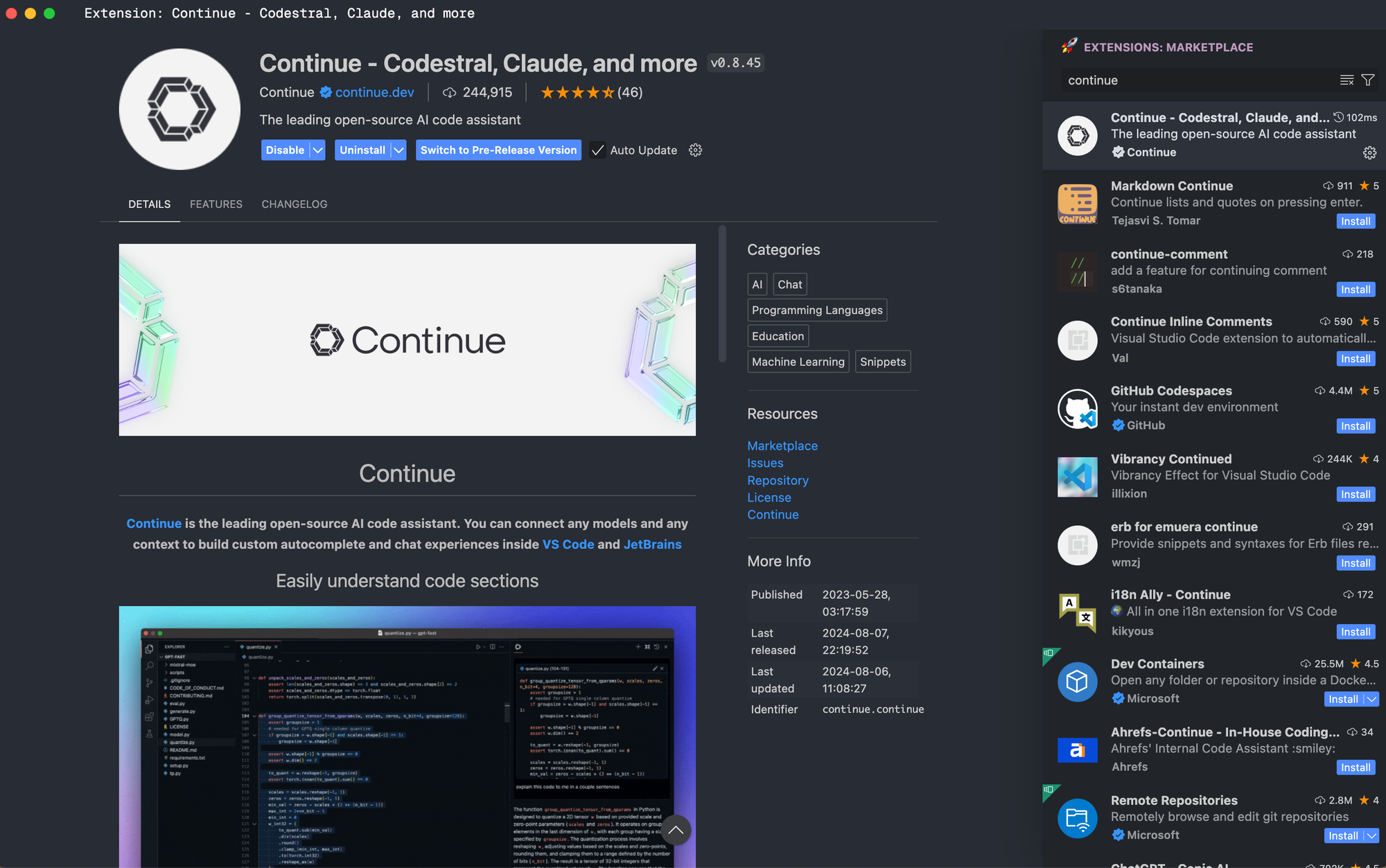

Introducing Continue.dev

Continue is the leading open-source AI code assistant. You can connect any models and any context to build custom autocomplete and chat experiences inside VS Code and JetBrains.

Note: If you already know about LLMs or want to jump straight to the tutorial, skip ahead to the setup section.

What is an LLM?

LLM stands for Large Language Model, a type of artificial intelligence (AI) model trained on vast amounts of text data to generate human-like language. ChatGPT, Claude, and Google Gemini are all built on LLMs. These particular ones are closed source, meaning we need to pay to use them, even though they offer a free tier. And yes, as you are probably guessing right now, there are also open-source LLMs that are free to use forever.

Open Source LLM

Strictly speaking, these models are open-weight rather than open-source, but most people call them open-source because the term is easier to remember.

How many open-weight LLMs are on the market right now?

There is major competition in the AI industry, and each model has its own use cases, advantages, and disadvantages.

Here are some of the most popular open-weight LLMs that people use nowadays:

- Llama 3 by Meta (formerly known as Facebook)

- Mistral by Mistral AI

- Gemma by Google

- Qwen by Alibaba

and so on...

What can we do with these open-weight LLMs? Why should I use them? Free ChatGPT is enough, right?

Yes, you can use the free ChatGPT as much as you like, but it comes with some limitations. What we need later to set up our own assistant is API access, and for ChatGPT you have to pay for that.

And what about code completion or autocomplete like Copilot? How do we get that for free?

In this article, we will set up fully free code completion using Codestral, one of the most popular code models, from Mistral AI.

Why Codestral?

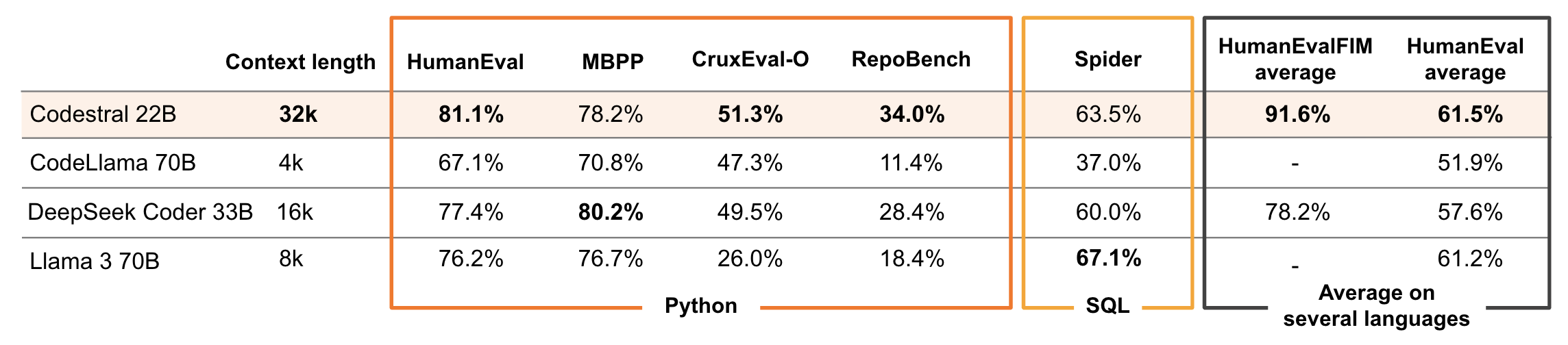

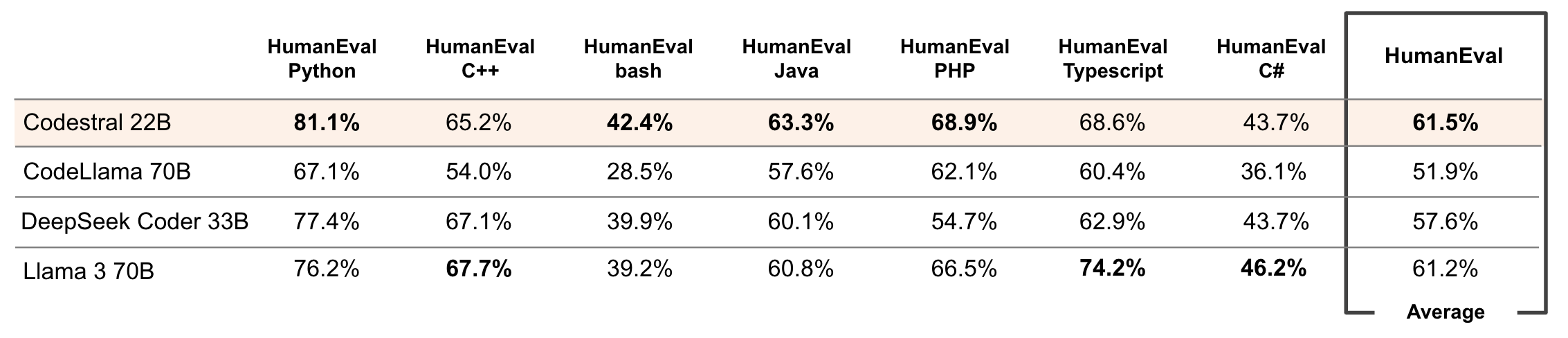

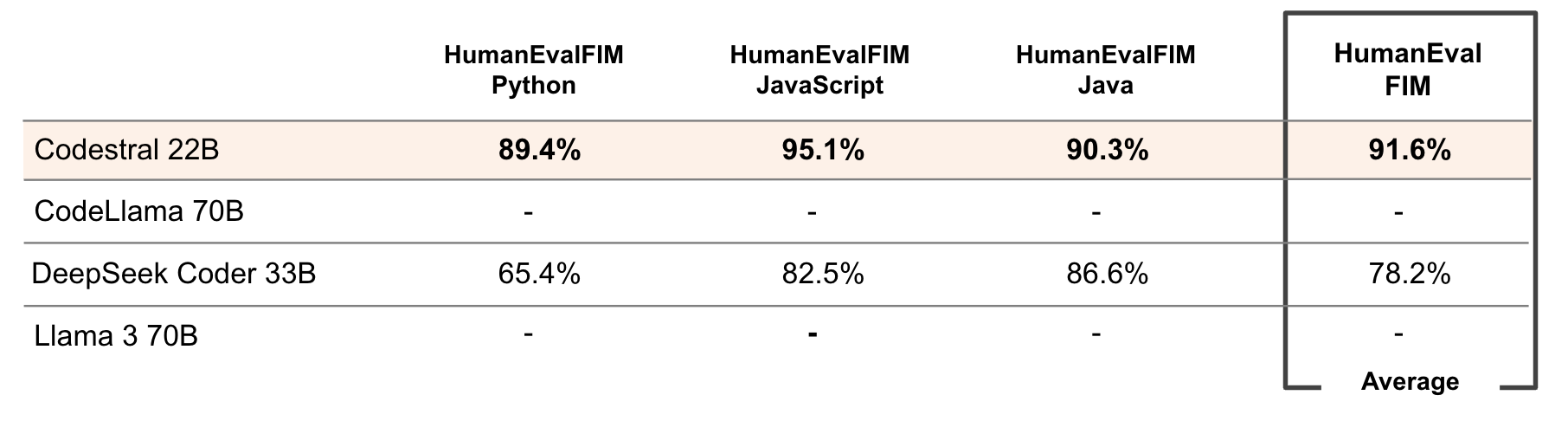

Well, it's a personal choice. There are other free models that you can use instead of Codestral, but the benchmarks above may give you a better overview of how it performs.

So what is the difference between Codestral and Mistral, or between ChatGPT and OpenAI Codex?

Each pair comes from the same provider (for example, Codestral and Mistral are both from Mistral AI). Mistral and ChatGPT are general-purpose LLMs that you can have a conversation with, while Codex and Codestral are designed for coding and code generation.

Time to choose LLM models for a personal ChatGPT-like assistant

There are a lot of options here, but I will share which models I use and why.
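One practical difference shows up in how the two kinds of model are prompted. A chat model receives a list of messages, while a code model such as Codestral can be given the code before and after the cursor and asked to fill in the gap (fill-in-the-middle). The sketch below only builds the two request bodies and sends nothing. It assumes the `prompt` and `suffix` field names of Mistral's fill-in-the-middle endpoint; the helper names and the chat model name are placeholders made up for illustration.

```python
import json


def chat_payload(model: str, question: str) -> dict:
    """Body for a chat-style request: the model sees a conversation."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }


def fim_payload(model: str, before_cursor: str, after_cursor: str,
                max_tokens: int = 64) -> dict:
    """Body for a fill-in-the-middle request: the model sees code around the cursor."""
    return {
        "model": model,
        "prompt": before_cursor,  # code that appears before the cursor
        "suffix": after_cursor,   # code that appears after the cursor
        "max_tokens": max_tokens,
    }


if __name__ == "__main__":
    # "your-chat-model" is a placeholder, not a real model id.
    chat = chat_payload("your-chat-model", "Explain what a goroutine is.")
    fim = fim_payload(
        "codestral-latest",
        "def add(a, b):\n    return ",
        "\n\nprint(add(1, 2))\n",
    )
    print(json.dumps(chat, indent=2))
    print(json.dumps(fim, indent=2))
```

This is why the configuration later in this article uses separate entries for chat models and for the autocomplete model.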

Claude Haiku by Anthropic

I use this model to ask about more general and more recent information, because open-weight models are limited by how current their knowledge is. And I use the Haiku model to save some money 🥲. If you are an OpenAI / ChatGPT fan, you can use GPT-4o mini instead.

Mixtral by MistralAI

Because of its different architecture, Mixture of Experts (MoE), I use this model to solve complex algorithm problems.

Llama by Meta (Optional, but recommended for most use cases)

Most people use this model because it is free and has capabilities comparable to ChatGPT*
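If the term is unfamiliar: a Mixture of Experts layer sends each token through only a few of its expert sub-networks, chosen by a small router. The toy sketch below illustrates that routing idea with plain Python functions standing in for experts. It is not Mixtral's actual implementation, and every name and number in it is made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_scores[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_layer(token, experts, router_scores, k=2):
    """Combine the outputs of the chosen experts, weighted by the router."""
    return sum(weight * experts[i](token)
               for i, weight in route_top_k(router_scores, k))


if __name__ == "__main__":
    # Eight toy "experts": each one just scales its input differently.
    experts = [lambda x, s=s: x * s for s in range(1, 9)]
    # Hypothetical router scores for one token.
    scores = [0.1, 2.5, 0.3, 0.2, 1.9, 0.0, 0.4, 0.1]
    print(route_top_k(scores))
    print(moe_layer(1.0, experts, scores))
```

Mixtral 8x7B uses eight experts with two active per token, so only part of the model runs for any given token.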

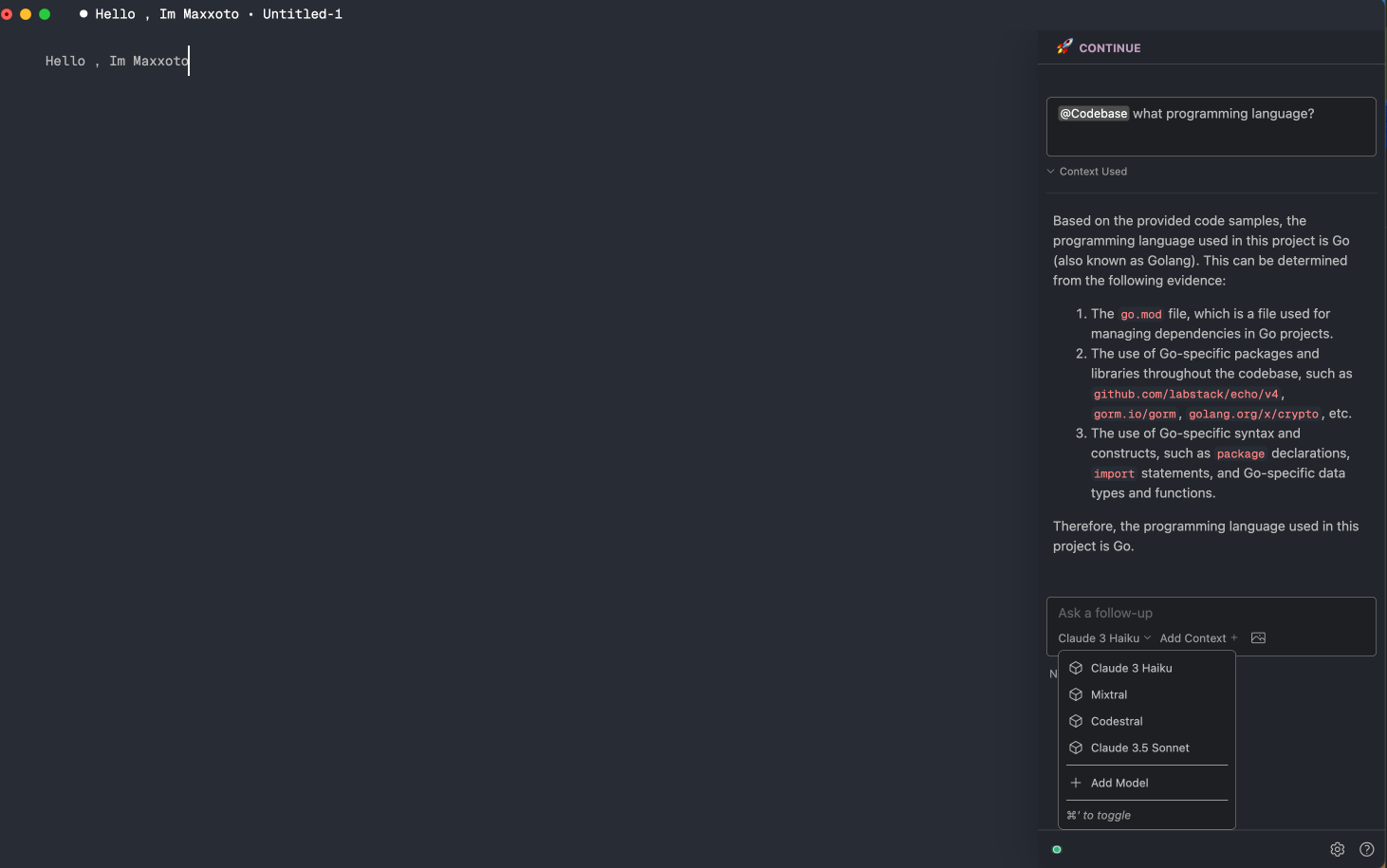

And here's an overview of my VS Code using Continue.dev as the AI assistant.

Which provider do I need to use? Should I run models locally?

You can host these models on your own server or locally (except Claude), but that means extra work. Thankfully, we can use these models without any setup and, more importantly, for FREE! 😆

We will use Groq as our provider to get our coding assistant running locally, with VS Code as the code editor.

Enough talk, let's set up the coding assistant in VS Code

1. Install VS Code (if not installed yet)

Visit these sites to learn how to install it.

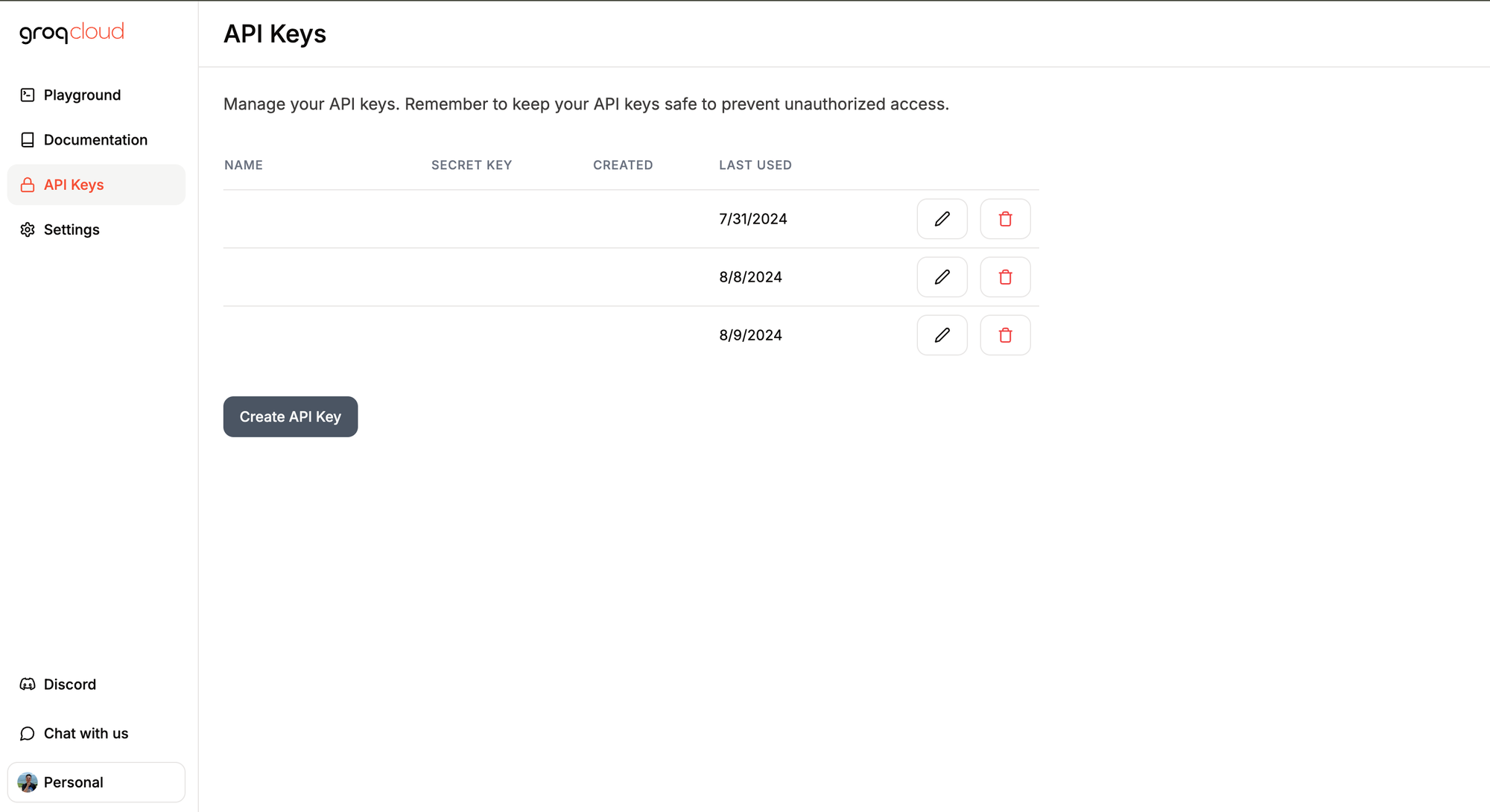

2. Get API keys from Groq

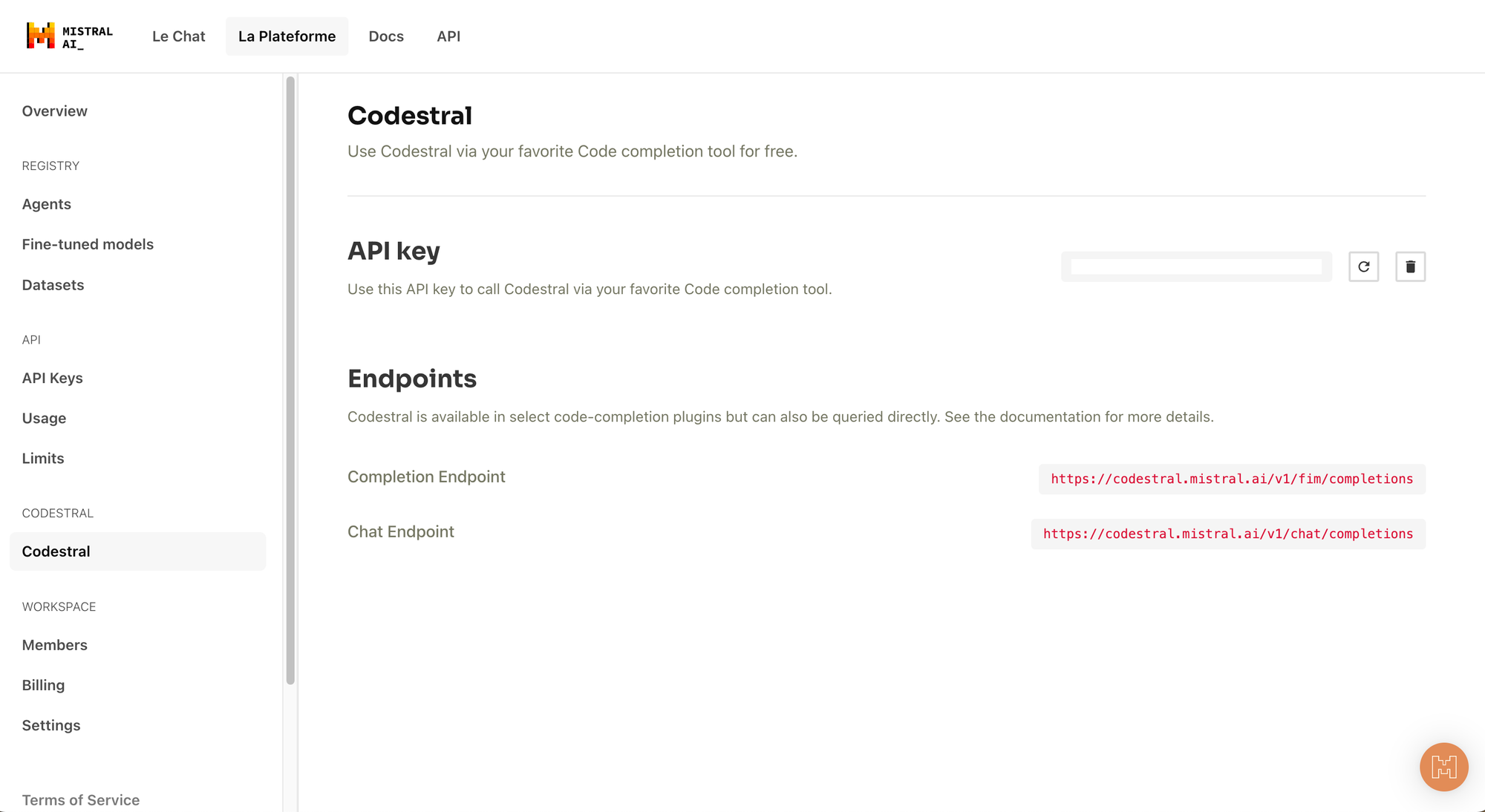
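Once you have a key, a quick way to confirm it works is to ask Groq's OpenAI-compatible API which models the key can see. Here is a minimal sketch using only the Python standard library. The `GROQ_API_KEY` environment variable name, the helper names, and the custom `User-Agent` value are assumptions for illustration.

```python
import json
import os
import urllib.request

GROQ_MODELS_URL = "https://api.groq.com/openai/v1/models"


def build_models_request(api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request listing the models a key can access."""
    if not api_key:
        raise ValueError("API key is empty")
    return urllib.request.Request(
        GROQ_MODELS_URL,
        headers={
            "Authorization": f"Bearer {api_key}",
            # Assumed value: some gateways reject the default urllib user agent.
            "User-Agent": "continue-setup-check/0.1",
        },
        method="GET",
    )


def list_model_ids(api_key: str) -> list[str]:
    """Send the request and return the model ids found in the response."""
    with urllib.request.urlopen(build_models_request(api_key), timeout=30) as resp:
        body = json.load(resp)
    return [model["id"] for model in body.get("data", [])]


if __name__ == "__main__":
    key = os.environ.get("GROQ_API_KEY", "")
    if key:
        print(list_model_ids(key))
    else:
        print("Set GROQ_API_KEY to run the check.")
```

Keeping the key in an environment variable rather than in the script means it is not committed by accident.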

3. Get Codestral API keys

4. Install the Continue.dev extension

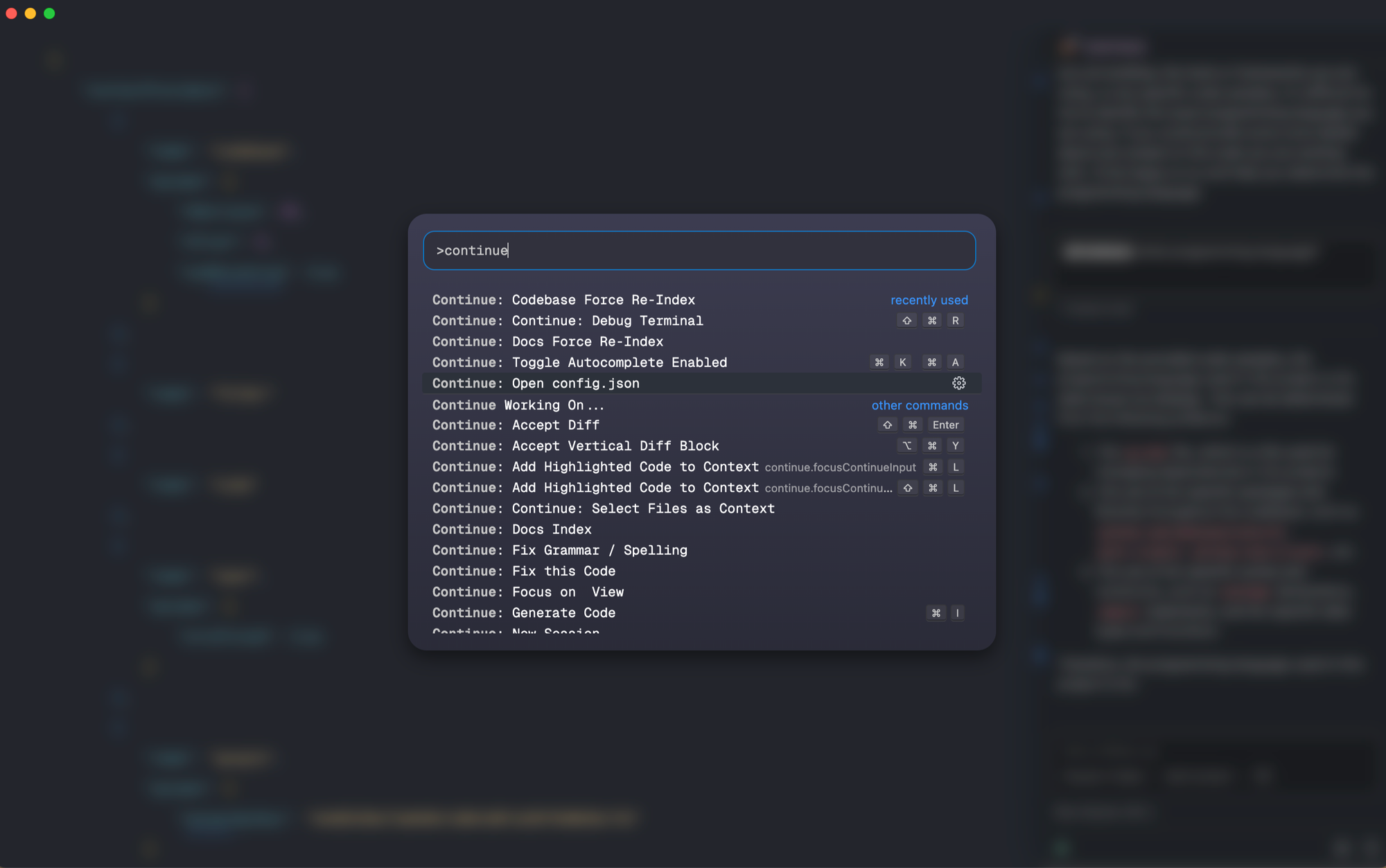

5. Open config.json

Set up your models and code completion API keys:

    {
      "models": [
        {
          "model": "claude-3-haiku-20240307",
          "contextLength": 200000,
          "title": "Claude 3 Haiku",
          "apiKey": "YOUR CLAUDE API HERE",
          "provider": "anthropic"
        },
        {
          "title": "Mixtral",
          "model": "mistral-8x7b",
          "contextLength": 4096,
          "apiKey": "YOUR GROQ API HERE",
          "provider": "groq"
        }
      ],
      "tabAutocompleteModel": {
        "title": "Codestral",
        "provider": "mistral",
        "model": "codestral-latest",
        "apiKey": "YOUR CODESTRAL API HERE"
      },
      // Use local embeddings
      "embeddingsProvider": {
        "provider": "transformers.js"
      },
      "allowAnonymousTelemetry": true
    }
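A common slip at this step is leaving a placeholder key in place or breaking the JSON while editing. The sketch below checks a config text for both problems. It assumes the file allows `//` line comments (as in the example above) and that placeholder keys start with `YOUR `; the function names are made up for illustration, and the check is stricter than necessary because Python's JSON parser rejects trailing commas.

```python
import json

PLACEHOLDER_PREFIX = "YOUR "  # assumed marker for keys that were never filled in


def strip_line_comments(text: str) -> str:
    """Remove // comments that sit outside of JSON strings."""
    out = []
    in_string = False
    escaped = False
    i = 0
    while i < len(text):
        ch = text[i]
        if in_string:
            out.append(ch)
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
            i += 1
        elif ch == '"':
            in_string = True
            out.append(ch)
            i += 1
        elif text.startswith("//", i):
            # Skip to the end of the line, keeping the newline itself.
            while i < len(text) and text[i] != "\n":
                i += 1
        else:
            out.append(ch)
            i += 1
    return "".join(out)


def find_problems(config_text: str) -> list[str]:
    """Return human-readable problems found in a config.json text."""
    try:
        config = json.loads(strip_line_comments(config_text))
    except json.JSONDecodeError as err:
        return [f"not valid JSON: {err}"]
    entries = list(config.get("models", []))
    if "tabAutocompleteModel" in config:
        entries.append(config["tabAutocompleteModel"])
    problems = []
    for entry in entries:
        title = entry.get("title", "<untitled>")
        key = entry.get("apiKey", "")
        if not key or key.startswith(PLACEHOLDER_PREFIX):
            problems.append(f"{title}: apiKey is missing or still a placeholder")
    return problems


if __name__ == "__main__":
    sample = """
    {
      "models": [
        {"title": "Mixtral", "provider": "groq", "apiKey": "YOUR GROQ API HERE"}
      ],
      // autocomplete model
      "tabAutocompleteModel": {"title": "Codestral", "apiKey": "real-key"}
    }
    """
    for problem in find_problems(sample):
        print(problem)
```

To check your real file, read its contents and pass the text to `find_problems`.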

Configure your context providers for better code suggestions and retrieval:

    {
      "contextProviders": [
        { "name": "codebase" },
        { "name": "folder" },
        { "name": "code" },
        {
          "name": "open",
          "params": {
            "onlyPinned": true
          }
        },
        {
          "name": "docs",
          "params": {
            "sites": [
              {
                "title": "Golang Docs",
                "startUrl": "https://go.dev/doc/",
                "rootUrl": "https://go.dev/doc/",
                "favicon": "https://go.dev/favicon.ico"
              }
              // Add more docs here
            ]
          }
        }
      ]
    }

6. Open your project as a folder
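A note on step 5: the `contextProviders` fragment is shown as its own object, but it is meant to live in the same `config.json` as the `models` block, as one more top-level key rather than a second `{ ... }` pasted underneath. Here is a minimal sketch of that merge, assuming both pieces have already been parsed into Python dicts; `merge_config` and `_entry_id` are hypothetical helpers, not part of Continue.

```python
import json


def _entry_id(item):
    """Use the "name" field as identity when present, else the serialized item."""
    if isinstance(item, dict) and "name" in item:
        return item["name"]
    return json.dumps(item, sort_keys=True)


def merge_config(base: dict, fragment: dict) -> dict:
    """Return a new config with the fragment's top-level keys merged into base.

    When both sides hold a list under the same key, entries are merged by
    identity, and the fragment's entry replaces an existing one with the same id.
    """
    merged = dict(base)
    for key, value in fragment.items():
        existing = merged.get(key)
        if isinstance(existing, list) and isinstance(value, list):
            by_id = {}
            for item in existing + value:
                by_id[_entry_id(item)] = item
            merged[key] = list(by_id.values())
        else:
            merged[key] = value
    return merged


if __name__ == "__main__":
    base = {
        "models": [{"title": "Mixtral", "provider": "groq"}],
        "contextProviders": [{"name": "code"}],
    }
    fragment = {
        "contextProviders": [
            {"name": "codebase"},
            {"name": "open", "params": {"onlyPinned": True}},
        ]
    }
    print(json.dumps(merge_config(base, fragment), indent=2))
```

Doing the same merge by hand in the editor works just as well; the point is that the file ends up as a single object.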

7. Happy Coding ❤️

Bonus

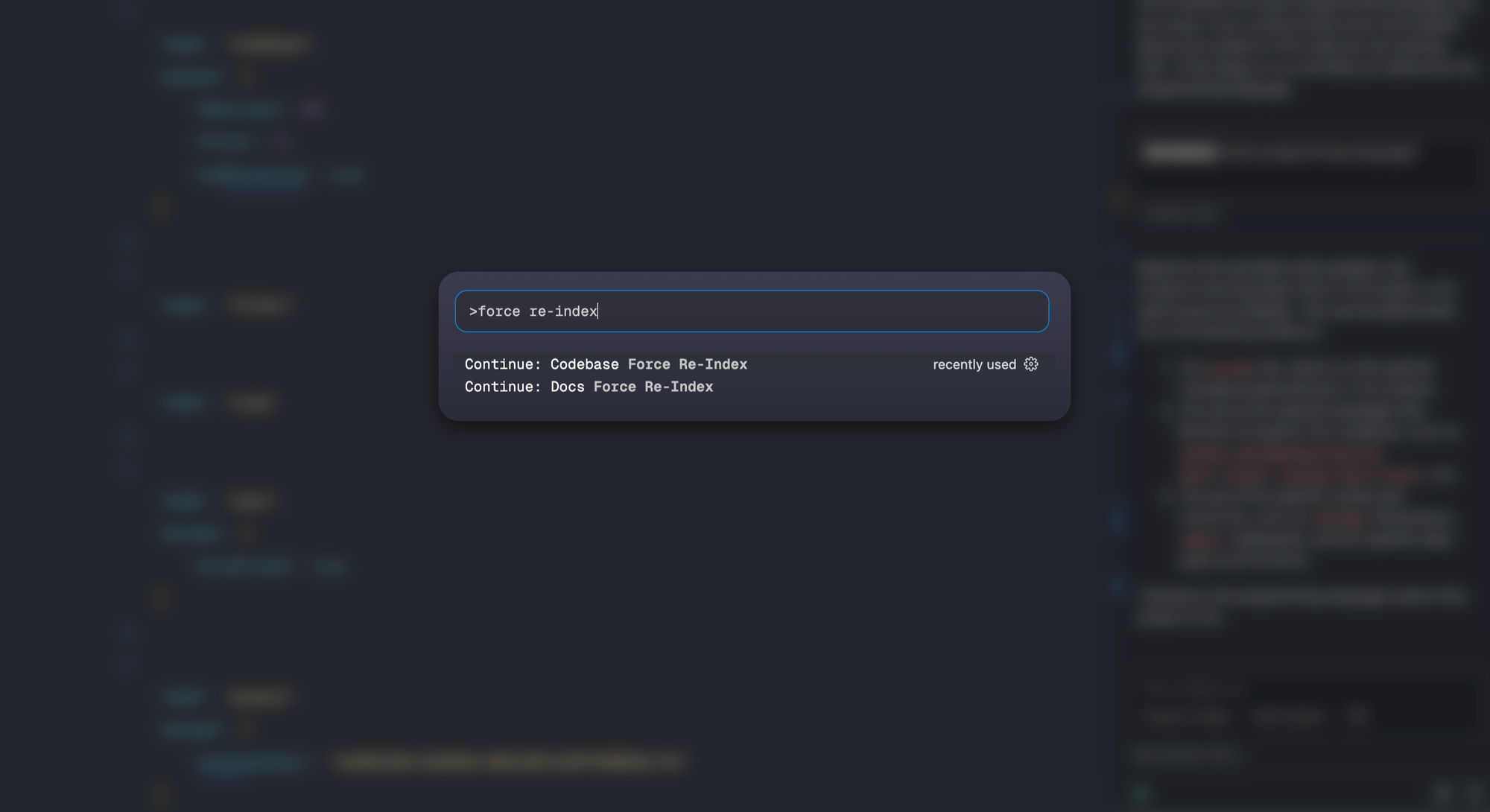

- If chat does not work properly with your own codebase, you need to force a re-index of the codebase

- The codebase context provider does not work when you are using a VS Code workspace (for now, and based on my machine)

What's Next?

Continue.dev with Advanced Configuration using Reranker and Slash Command

Thanks for reading! If you have any suggestions, please reach me at hi@maxxoto.dev